Artificial intelligence (AI) has fast become a competitive necessity in today’s business environment. At the heart of many AI-driven solutions are language models, powerful tools that can process and generate human-like text. These technologies are transforming how businesses handle documents, data, and decision-making.

But the success of any AI implementation hinges on one critical factor—data quality. Without accurate, well-structured data, even the most sophisticated language models can falter. In this guide, we’ll break down the difference between large language models (LLMs) and small language models (SLMs), and why choosing the right model, paired with high-quality data, is key to unlocking the full potential of AI for your business.

What is a language model?

A language model is a type of AI developed to understand, generate, and predict human language. It is trained on vast amounts of text, from which it learns grammar, patterns, and the context of words and phrases. The model then uses that knowledge to write sentences and paragraphs, respond to questions, translate languages, and more.
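To make the idea of learning patterns from text concrete, here is a minimal sketch of a toy language model in Python. It only counts which word tends to follow which, so it is far simpler than any real SLM or LLM, which rely on neural networks. The corpus and the function names are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram_model(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follower_counts: Dict[str, Counter] = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if it is unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny, invented training corpus (an assumption for this example).
corpus = "the invoice is due today . the invoice is paid . the payment is late"
model = train_bigram_model(corpus)

print(predict_next_word(model, "invoice"))   # "is" follows "invoice" both times
print(predict_next_word(model, "contract"))  # word never seen in the corpus
```

Real language models replace the word counts with billions of learned parameters, but the principle is the same: predict what comes next based on patterns seen during training.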

What is a small language model?

A small language model (SLM) is—as you might guess from its name—a language model that operates on a smaller scale. These AI tools are crafted to be focused and efficient for the specific tasks they’re designed for.

SLMs are generally faster, more affordable, and easier to train, making them ideal for specific, targeted tasks. In applications like intelligent document processing (IDP), SLMs can be purpose-built to drive essential functions such as data extraction from documents, document classification, optical character recognition (OCR), and natural language processing (NLP). SLMs can accurately extract information from a wide range of document types while using less computing power and having a smaller environmental footprint.

What is a large language model?

A large language model (LLM) is an AI with the capacity to understand and generate human language at an impressively high level for a number of different purposes. Subtle nuances, intricate patterns—these models can pick up on those tiny details and often come up with creative, insightful responses.

LLMs are equipped to perform a huge variety of tasks. A single LLM, for example, could be used to write a multi-language invoice, or even assist in drafting clinical notes for healthcare providers. Since LLMs are built with billions of parameters, they need more computational power—but they’re able to use that power to create unique content, mimic detailed conversations, and more.

Benefits of small language models

Bigger isn’t always better. Small language models (SLMs) offer quite a few advantages that make them the perfect choice for certain business processes. Let’s look at five key benefits that make these compact AI tools stand out:

Fast performance

If you need specific tasks completed in a flash, SLMs may be your best bet. These tools learn from a smaller, more targeted data set that is directly relevant to the tasks they’re expected to carry out. That makes SLMs easier to train and faster to operate, with a lower environmental impact to boot.

For example, SLMs are often used in IDP to quickly and accurately identify, sort, and extract data from documents that come into a business. Other uses for SLMs include chatbots that provide instant customer service and real-time translation services that keep conversations flowing, to name just two.

Budget-friendly

AI tools don’t always require deep financial resources. SLMs, for example, are more cost-effective to create and more affordable to run than their larger counterparts. Even bootstrapped startups have the opportunity to harness the power of AI without a huge price tag.

Tailored to specific organizations

Every organization has different needs, and SLMs can be purpose-built to fulfill those needs, whether they have to do with legal forms or healthcare appointments. These models can be trained on your specialized information to become experts in your niche. One size doesn’t have to fit all.

Accuracy

When you need focused, reliable results, SLMs are often the better choice. Because SLMs are trained for particular tasks, they can be more accurate than much larger models on those tasks, and in a fraction of the processing time. SLMs can understand the context of nuanced questions and generate relevant, meaningful responses within their areas of expertise. In fact, a growing number of businesses are opting for SLMs over larger, more general models to meet their unique needs.

Easy to update

Businesses constantly have to adapt to changing times, so your AI tools need to be able to roll with those changes. SLMs are easier to develop and update, which means you can more easily keep them current with the latest information and industry trends. Your model can stay relevant and continue to provide valuable information, even as your company evolves.

Benefits of large language models

Trained on massive amounts of data, large language models (LLMs) can generate creative text, answer complex questions, and understand context and nuance. However, LLMs can also sometimes "hallucinate," generating plausible-sounding but incorrect or nonsensical information. Despite this limitation, the potential benefits of LLMs can make them a powerful tool for businesses and individuals alike.

Vast knowledge base

LLMs have learned from a mountain of information, so they have a broad understanding of language and the world. These models can answer a broad range of questions, summarize many types of information, and even come up with creative content with remarkable accuracy.

Adaptability and flexibility

LLMs are incredibly versatile. General-purpose LLMs like ChatGPT and Gemini are among the best known, but LLMs can also be tailored and fine-tuned for specialized business purposes. For example, LLMs can be fine-tuned to pull information from paperwork or help companies stay on top of changing government regulations. Businesses can customize LLMs to perform a diverse array of industry-specific operations, too.

Multilingual capabilities

Many LLMs can understand and generate text in multiple languages. This is an especially helpful feature if your company is located in multiple countries or works with international vendors and clients. Communication and collaboration can happen across borders with greater ease.

Knowledge synthesis

LLMs are adept at handling and making sense of large amounts of information—useful when dealing with tasks that need a deep understanding and detailed analysis. For example, LLMs can review thousands of legal documents and summarize the key points, so lawyers can get a comprehensive overview without having to read every single document in full. This ability to gather and connect complex information makes LLMs useful for thorough research.

Enhanced creativity

LLMs are particularly adept at generating professional content because they are trained on diverse and comprehensive language data, including industry-specific terminology and communication styles. But having good data to work from is key to getting good results from an LLM, particularly in a professional setting. With access to the right data, and retrieval-augmented generation (RAG) to connect LLMs to external knowledge sources, an LLM can draft compelling marketing copy, generate insightful reports, or even produce personalized email responses, all while maintaining a consistent brand voice. Their advanced language capabilities allow them to produce persuasive and informative outputs, making them valuable tools for business communication and content creation.

Small vs. large language models: What are the differences?

Size and complexity

Large language models (LLMs) hold a much larger amount of knowledge than small language models (SLMs). That’s because LLMs have a vast number of parameters, often in the billions, making them highly advanced and capable of handling a broad spectrum of tasks.

However, LLMs can also be slow and resource-heavy, taking up to 50 times longer to process information than smaller models. In contrast, SLMs are purpose-built to be more streamlined, with parameter counts that can be as low as 1 to 10 million for narrowly focused models. This design makes SLMs quicker and more efficient to train and use.

Cost and resource requirements

SLMs run like efficient compact cars, while LLMs operate more like big trucks. Because LLMs require a lot more computational power and memory, they can be rather expensive to run. LLMs can also create substantial environmental impacts due to their high energy consumption. SLMs, on the other hand, are more lightweight, use less energy, and can often be run on standard hardware.
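A rough back-of-the-envelope calculation shows why parameter count drives hardware needs. The sketch below assumes each parameter is stored as a 16-bit number (2 bytes) and compares a hypothetical 10-million-parameter SLM with a hypothetical 7-billion-parameter LLM. Both sizes are illustrative assumptions, and the estimate covers only the model weights, not the extra memory needed while the model runs.

```python
def weight_memory_gb(parameter_count: int, bytes_per_parameter: int = 2) -> float:
    """Estimate the memory needed just to hold a model's weights, in gigabytes.

    Assumes 2 bytes per parameter (16-bit precision) unless told otherwise.
    """
    return parameter_count * bytes_per_parameter / 1e9


# Hypothetical model sizes, chosen only to illustrate the difference in scale.
slm_parameters = 10_000_000       # 10 million
llm_parameters = 7_000_000_000    # 7 billion

slm_memory = weight_memory_gb(slm_parameters)
llm_memory = weight_memory_gb(llm_parameters)

print(f"SLM weights: {slm_memory:.2f} GB")
print(f"LLM weights: {llm_memory:.2f} GB")
print(f"The LLM needs about {llm_memory / slm_memory:.0f} times more memory for weights")
```

Under these assumptions the smaller model fits comfortably in the memory of an ordinary laptop, while the larger one calls for a high-end GPU or server.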

Capabilities

LLMs excel at an extensive array of tasks, delivering accurate and fluent results. In contrast, SLMs are specialized for particular applications, which lets them perform well while using fewer resources and costing less.

For instance, a small language model tailored for accounts payable can zero in on the exact information your AP team needs and ignore everything else. This focused approach not only speeds up processing but also often delivers accuracy comparable to much larger models, all while being lighter on your resources.

Examples of LLMs and SLMs

Small language model example

SLMs are transforming industries with their targeted expertise and efficiency. An SLM tailored for intelligent document processing (IDP), for instance, can enhance optical character recognition (OCR) in document processing. This not only speeds up the workflow but also reduces errors compared to manual methods.

Large language model example

LLMs are the driving force behind popular AI tools like ChatGPT, capable of handling a wide range of tasks from brainstorming ad campaigns to translating forms. That said, LLMs can also be fine-tuned through further training on data closely related to a specific task. For example, in the medical field, an LLM could be fine-tuned on a vast dataset of medical literature and patient records to become a specialist in answering complex medical queries.

RAG LLMs & SLMs

What is retrieval-augmented generation (RAG)?

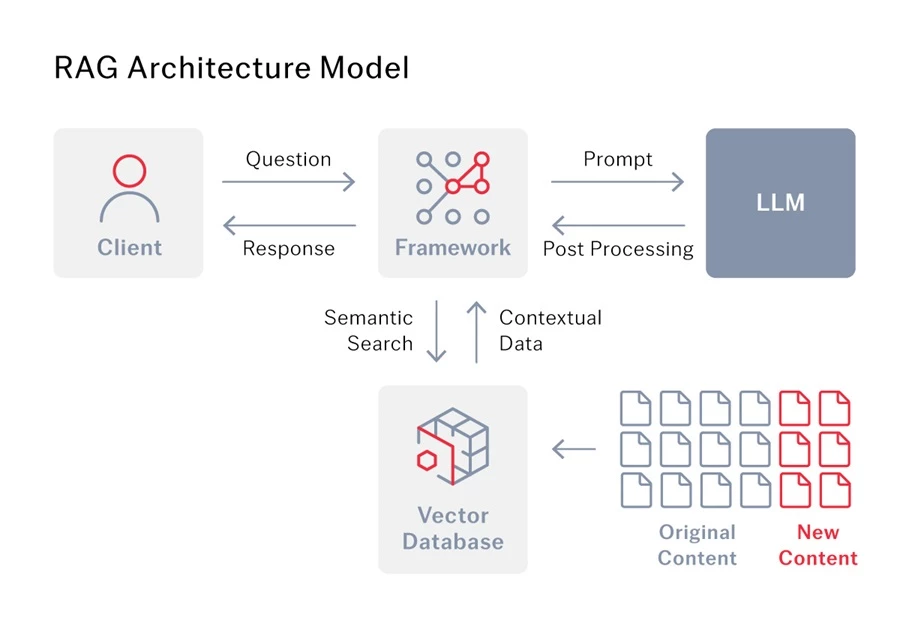

Retrieval-augmented generation (RAG) is an advanced AI method that allows language models to give better answers. Usually, language models have to rely on just the information they’ve been trained on. But with RAG, the model also gets access to a separate knowledge base or database and can use that extra information to generate more accurate responses.
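The retrieve-then-generate flow can be sketched in a few lines of Python. This is a simplified illustration, not how any particular product implements RAG: the knowledge base is three invented sentences, retrieval is a plain word-overlap score (production systems typically use embedding-based search), and the final call to a language model is left out, so the sketch stops at building the prompt.

```python
import re
from typing import List, Set

# Invented knowledge base entries, used only for this example.
KNOWLEDGE_BASE = [
    "Invoice 1042 from Acme Supplies is due on 15 March.",
    "Lease agreements are reviewed by the legal team every quarter.",
    "Purchase orders above 10,000 EUR need approval from finance.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Return the top_k documents sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: List[str]) -> str:
    """Combine the retrieved context with the question into one prompt."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("When is invoice 1042 due?", KNOWLEDGE_BASE)
print(prompt)  # This prompt would then be sent to the language model.
```

Because the relevant fact travels with the question, the model can answer from current data instead of relying only on what it saw during training.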

RAG for LLMs

LLMs are great at generating text, but their knowledge can become outdated. Retrieval-augmented generation (RAG) solves this problem by linking LLMs to external sources of information, like the internet or specialized databases, so they can access the latest and most relevant facts to answer your questions.

But RAG doesn't just improve accuracy. By combining the information it finds with the context of your question, RAG helps the LLM understand your needs better. This leads to more nuanced and relevant responses, reducing the chance of hallucinations. High-quality data is key here, as it provides the LLM with the deeper context it needs to generate truly insightful answers.

Let’s take intelligent document processing (IDP) as an example. When a business uses IDP alongside an LLM, RAG acts as the connector between the two. Essentially, RAG allows the LLM to access and use the data extracted by IDP, which provides the LLM with the data needed to deliver more precise outcomes.

Choosing the right language model for your needs

The right language model depends on what you want to use it for. LLMs are ideal for tasks requiring vast amounts of contextual understanding, but SLMs are better suited for specific, focused tasks and are often integrated into larger platforms to handle specialized functions efficiently.

In fact, you may already be using SLMs in the tools you rely on. ABBYY’s IDP platform, for example, leverages SLMs for tasks like OCR, alongside other AI technologies like machine learning and image semantic segmentation. These tools work together to help automate processes and enhance accuracy, making your workflows more efficient.

Combining LLMs and SLMs

Reveal Group, an award-winning intelligent automation services company, needed a solution to help its customer extract 350+ fields from lease-related documents, which arrived in varying states of quality, document type, format, and language. To solve this challenge, Reveal Group trialed several technology combinations across five different approaches and landed on the combination that yielded a vastly superior result: LLMs and SLMs, in the form of ABBYY Vantage and GPT-4 Turbo, alongside Blue Prism robotic process automation.

Together, GPT-4 Turbo and ABBYY Vantage created a consolidated user experience, providing results before any field-level training. This approach leverages IDP for classification and segmentation and GenAI for field extraction. Integrating generative AI, IDP, and RPA to automate data extraction and entry achieved an 82% accuracy rate. For the small subset of fields falling outside the configured confidence threshold, ABBYY Vantage’s manual review station lets human users correct machine-extracted values. The machine learning model learns from the human-corrected values and improves over time, reducing the need for manual review. Read the full Reveal Group case study here.
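The confidence-threshold step described above follows a common pattern: accept extracted values the system is confident about and send the rest to a person. Here is a minimal Python sketch of that routing logic. It is not ABBYY Vantage's API; the field names, confidence scores, and the 0.90 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ExtractedField:
    name: str
    value: str
    confidence: float  # score between 0.0 and 1.0 reported by the extractor


# Hypothetical threshold; a real deployment would tune this per field.
CONFIDENCE_THRESHOLD = 0.90


def route_fields(
    fields: List[ExtractedField], threshold: float = CONFIDENCE_THRESHOLD
) -> Tuple[List[ExtractedField], List[ExtractedField]]:
    """Split fields into auto-accepted ones and ones needing human review."""
    accepted = [field for field in fields if field.confidence >= threshold]
    needs_review = [field for field in fields if field.confidence < threshold]
    return accepted, needs_review


# Invented extraction results for a lease document.
fields = [
    ExtractedField("tenant_name", "Jane Doe", 0.97),
    ExtractedField("lease_start", "2024-01-01", 0.93),
    ExtractedField("monthly_rent", "1,2O0", 0.42),  # likely OCR error: letter O
]

accepted, needs_review = route_fields(fields)
print("Accepted automatically:", [field.name for field in accepted])
print("Sent to manual review:", [field.name for field in needs_review])
```

Values corrected during review can then be fed back as training examples, which is how the share of fields needing manual attention shrinks over time.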

It’s all about data quality

Ultimately, the quality of the data you feed into whichever language model you employ determines how precise and reliable the outcomes will be. To effectively connect your data with the language model you choose, RAG is essential. ABBYY’s retrieval-augmented generation works seamlessly with language models to provide accurate, contextual, and up-to-date responses. By integrating with your existing knowledge base, ABBYY’s RAG ensures your AI has the context it needs to generate smart, reliable answers.

Get in touch with one of our experts to learn how ABBYY can make your language model’s responses more timely, relevant, and tailored to your business.